Why Most “AI” Therapists Are Just Glorified Fortune Cookies (And Why We Use Gemini 3)

The market is flooded with “AI Wellness Apps” that are nothing more than cheap wrappers around outdated models. They spit out generic advice like “Have you tried breathing?” or “Don’t give up!” But addiction is complex, adaptive, and incredibly smart. To beat it, you don’t need a chatbot; you need a Reasoning Engine. We are building Accountably on the bleeding edge of AI (Gemini 3-class models) because when you are at war with your own biology, you need “Military-Grade” intelligence, not a digital fortune cookie.

There is a dirty secret in the AI app world. Most “AI Coaches” aren’t actually intelligent. They are what engineers call “Wrappers.” They are cheap, lightweight layers built on top of older, “discount” models (like GPT-3.5 Turbo or basic Llama). They are programmed to be safe, polite, and incredibly generic.

You say: “I feel like relapsing.” They say: “I’m sorry to hear that. Remember your goals! Maybe drink some water.”

This is useless. Addiction is a Non-Linear Adversary. It lies. It rationalizes. It hides behind complex emotional layers. A “cheap” model cannot detect the lie. It takes your words at face value.

We refuse to use “discount” intelligence. We are building on the architecture of Gemini 3 and next-generation reasoning models. We pay for the “Heavy Artillery” because your brain deserves a sparring partner that can actually keep up.

Key Takeaways: The Intelligence Gap

- Reasoning vs. Retrieval: Cheap bots just “retrieve” a pre-written answer. Advanced models “reason” through your specific situation, connecting dots you didn’t see.

- Nuance Detection: A cheap model thinks “sad” and “grief” are the same. A real intelligence understands the micro-texture of your emotion.

- Long-Context Memory: Addiction is a story, not a sentence. We use massive context windows to remember what you told us 3 weeks ago, spotting patterns a human would miss.

- The “Therapeutic Alliance”: Psychotherapy research consistently links better outcomes to the therapeutic alliance: you only trust a coach if you feel understood. Cheap bots fail the Turing Test of empathy.

The Problem with “Wrapper” Apps

If you are fighting a Dopamine Addiction (Porn, Gambling, Scrolling), your internal “Addict Voice” is incredibly sophisticated. It uses Cognitive Distortions to trick you.

- “I’ll just look for 5 minutes.”

- “I had a hard day, I deserve this.”

A cheap AI sees these sentences and says: “Okay, just be careful!” It validates the distortion because it lacks the Reasoning Depth to challenge it. It becomes an enabler.

The Gemini 3 Advantage: A “Reasoning Engine”

We don’t use AI to chat. We use AI to think. By leveraging state-of-the-art models with advanced Chain-of-Thought reasoning, Accountably functions like a forensic psychologist.

1. It Detects the “Lie”

When you say, “I’m just tired, I need to browse to relax,” a Reasoning Engine analyzes the subtext.

- Processing: “User claims fatigue. However, timestamp is 10 AM. Typing patterns indicate high arousal, not low energy. History shows ‘browsing’ leads to relapse 90% of the time. The user is rationalizing.”

- Response: “You say you’re tired, but you’re vibrating with energy. Is it fatigue, or is it boredom masking anxiety?”

It calls your bluff.
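To make the “Processing” step concrete, here is a toy Python sketch of that kind of cross-check: a stated excuse compared against the context around it. Everything in it is hypothetical. The `ContextSignals` fields, the `rationalization_flags` function, and the thresholds are illustrative assumptions, not Accountably’s implementation and not any Gemini API. A reasoning model weighs cues like these inside its own chain of thought rather than through hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class ContextSignals:
    """Hypothetical signals available alongside a message (all assumptions)."""
    hour: int                           # local hour of day, 0-23
    typing_speed_cpm: float             # characters per minute in this message
    baseline_speed_cpm: float           # the user's typical typing speed
    relapse_rate_after_browsing: float  # share of past "browsing" episodes that ended in relapse


def rationalization_flags(claim: str, s: ContextSignals) -> list[str]:
    """List the ways a stated excuse conflicts with its context.

    The thresholds are arbitrary illustrations, not clinical values.
    """
    text = claim.lower()
    claims_fatigue = any(word in text for word in ("tired", "exhausted", "drained"))
    flags = []
    # Fatigue is less plausible mid-morning.
    if claims_fatigue and 8 <= s.hour < 12:
        flags.append("fatigue claimed mid-morning")
    # Typing well above the user's baseline suggests arousal, not exhaustion.
    if claims_fatigue and s.typing_speed_cpm > 1.3 * s.baseline_speed_cpm:
        flags.append("typing much faster than usual (high arousal, not low energy)")
    # The proposed "relaxing" activity has a bad track record for this user.
    if "browse" in text and s.relapse_rate_after_browsing >= 0.8:
        flags.append("'browsing' has usually led to relapse")
    return flags


signals = ContextSignals(hour=10, typing_speed_cpm=320, baseline_speed_cpm=200,
                         relapse_rate_after_browsing=0.9)
for flag in rationalization_flags("I'm just tired, I need to browse to relax", signals):
    print("-", flag)
```

On the example above (10 AM, typing faster than usual, a 90% relapse history), all three checks fire. That combination is what lets a coach call the bluff instead of saying “Okay, just be careful!”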

2. Massive Context Windows (The “Elephant” Memory)

Standard chatbots have the memory of a goldfish. They forget who you are after a long conversation. We utilize Long-Context architecture. The AI remembers that your trigger is “feeling ignored by your spouse.” So when you say “I’m lonely” on a Tuesday, it doesn’t just say “Call a friend.” It asks: “Did something happen with [Spouse Name] tonight?” That connection changes everything.
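Here is a toy Python sketch of the connection described above: a trigger disclosed weeks ago gets linked to a new message. It assumes a hypothetical `TriggerMemory` store that matches feeling-words by keyword. A long-context model works differently: it keeps the earlier conversation in its context window and reasons over it. So treat this as an illustration of the idea, not of how Accountably or Gemini actually works.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Trigger:
    description: str       # what the user said sets them off
    feelings: set[str]     # emotions the user linked to it
    first_mentioned: date


@dataclass
class TriggerMemory:
    """Hypothetical long-lived store of triggers the user has disclosed."""
    triggers: list[Trigger] = field(default_factory=list)

    def remember(self, description: str, feelings: set[str], when: date) -> None:
        self.triggers.append(Trigger(description, {f.lower() for f in feelings}, when))

    def related(self, message: str) -> list[Trigger]:
        """Return stored triggers whose linked feelings appear in the new message."""
        words = {w.strip(".,!?'\"").lower() for w in message.split()}
        return [t for t in self.triggers if t.feelings & words]

    def follow_up(self, message: str) -> str:
        matches = self.related(message)
        if not matches:
            return "What's going on right now?"
        # Ask about the oldest matching trigger: the pattern with the longest history.
        oldest = min(matches, key=lambda t: t.first_mentioned)
        return f"Is this connected to {oldest.description}?"


memory = TriggerMemory()
memory.remember("feeling ignored by your spouse", {"lonely", "ignored", "invisible"}, date(2024, 5, 1))
print(memory.follow_up("I'm lonely tonight."))  # Is this connected to feeling ignored by your spouse?
```

Without the stored trigger, the same message gets only the generic fallback question, which is the goldfish behavior described above.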

3. Multimodality (Seeing the Reality)

Gemini-class models are Multimodal: they understand text, images, and audio natively. In the future, you won’t just type. You might speak. You might show a screenshot of your screen time. The AI could then process the tone of your voice (shaky? angry?) or the visual data of your environment to give advice that fits the reality, not just the text.

Why We Don’t Skimp on Compute

Running these models is expensive. It costs 10x more than the industry standard. Most VC-backed startups won’t do it because it hurts their margins. We do it because we aren’t optimizing for margins; we are optimizing for Recovery Outcomes.

If the AI saves you from one relapse—one $5,000 gambling loss, or one night of shame—the compute cost is irrelevant.

FAQ: Real Intelligence

Q: Is the AI sentient? A: No. But it is High-Fidelity. It mimics the reasoning patterns of a master clinician so effectively that the distinction doesn’t matter for the outcome. It passes the “Clinical Turing Test.”

Q: Why does it ask me so many questions? A: Because it is thinking. A cheap bot gives answers. A smart bot asks questions. It uses the Socratic Method to guide you to your own breakthrough.

Q: Is it safe? A: Yes. In fact, smarter models are safer. They are better at understanding nuance and recognizing self-harm or crisis situations that a dumb bot might miss or mishandle.

Stop Talking to Fortune Cookies

You are dealing with the most complex machinery in the known universe: The Human Brain. Don’t try to fix it with a calculator. Use a supercomputer.

Join the Waitlist (Access the smartest recovery engine ever built.)

The Silent Breakup: Why Quitting OnlyFans Feels Like a Real Divorce

TL;DR: You canceled the subscription. You stopped watching the stream. You expected to feel richer. Instead, you feel a crushing sense of loss, grief, and loneliness. Society mocks this as “Simping,” but psychologists call it “Parasocial Grief.” Your brain’s social circuitry does not cleanly distinguish between a face on a screen and a face in the room. When you cut off a “Virtual Relationship,” you can trigger a withdrawal response much like a real breakup. Here is the science of Simulated Intimacy and how to mourn a partner you never met.

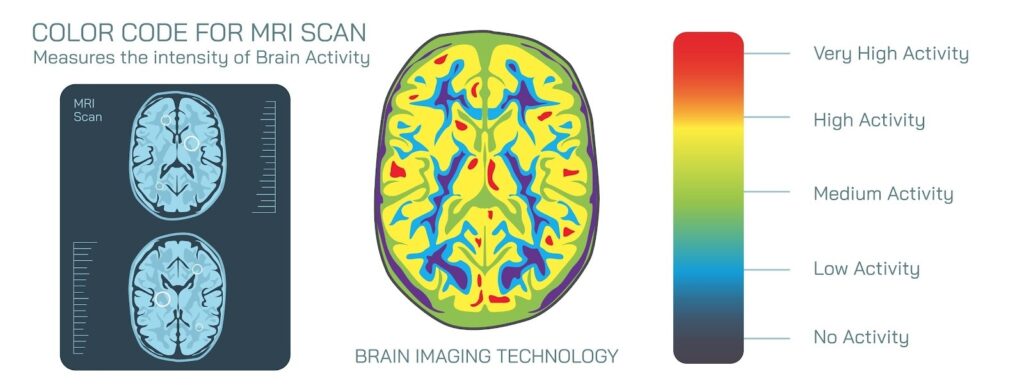

Note: The image above illustrates how an “Addicted” brain (including the brain of someone hooked on parasocial bonds) shows distinct neural patterns compared to a healthy brain.

There is a unique kind of shame reserved for men who form emotional attachments to content creators. Whether it’s an OnlyFans model, a Twitch streamer, or a VTuber, the dynamic is the same: You pay (money or time), and they give you attention (or the illusion of it).

When you finally decide to quit—to save your wallet or your sanity—you don’t just feel bored. You feel Heartbroken.

You miss their voice. You miss the “Good Morning” posts. You feel a void in your daily routine. And then the shame hits: “Why am I crying over someone who doesn’t even know my name?”

You are going through a Parasocial Breakup. It is a documented psychological phenomenon. Your feelings are real, even if the relationship wasn’t.

Key Takeaways: The Architecture of False Love

- Parasocial Interaction (PSI): Coined in 1956 by researchers Donald Horton and R. Richard Wohl, this term describes a one-sided relationship where one party expends emotional energy and the other is unaware of their existence.

- The “Dunbar Number” Hack: Your brain evolved in tribes of ~150 people. It assumes that if you see a face every day and hear their voice, they are “Tribe.” It bonds to them biologically.

- Simulated Intimacy: The “Girlfriend Experience” (GFE) is a product designed to hack your oxytocin receptors. It provides the feeling of safety without the risk of rejection.

- The Withdrawal: When you quit, you lose your primary source of Co-Regulation. Your nervous system panics because its “safe person” is gone.

The Neuroscience of “Simping”

Why does the brain fall for it? Because the Ventral Tegmental Area (VTA), a hub of the brain’s dopamine reward system that also responds to social reward, is ancient. It doesn’t understand “pixels.”

When a streamer looks at the camera and says, “Thank you, [YourName], for the sub,” your VTA fires.

- Dopamine: You feel seen (Status).

- Oxytocin: You feel connected (Bonding).

Over months of daily viewing, your brain builds a Neural Map of this person. They become part of your daily regulation. You use them to soothe anxiety. When you cancel the sub, you aren’t just saving $10. You are burning that Neural Map. You are severing an attachment bond.

The “Loneliness Economy”

We are living through a Male Loneliness Epidemic. Tech companies know this. They have industrialized companionship.

- OnlyFans monetizes sexual intimacy.

- Twitch monetizes social intimacy.

- AI Girlfriends monetize emotional intimacy.

These platforms use Variable Reward Schedules (will she reply?) to keep you hooked. They turn human connection into a subscription service. The tragedy is that Simulated Intimacy is like drinking saltwater. It looks like water (connection), but it dehydrates you (isolates you) further.
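A small Python simulation shows why “will she reply?” is so sticky. It compares a fixed-ratio schedule (a reward on every 5th check) with a variable one that pays off at the same average rate but at unpredictable moments. Strictly speaking, the variable version below is a random-ratio schedule (each check has a 1-in-5 chance), a common stand-in for variable-ratio reinforcement; the function names and numbers are illustrative assumptions.

```python
import random


def fixed_ratio(n_checks: int, ratio: int) -> list[bool]:
    """Reward arrives on every `ratio`-th check: fully predictable."""
    return [(i + 1) % ratio == 0 for i in range(n_checks)]


def variable_ratio(n_checks: int, mean_ratio: int, seed: int = 0) -> list[bool]:
    """Each check pays off with probability 1/mean_ratio: same average, unpredictable timing."""
    rng = random.Random(seed)
    return [rng.random() < 1 / mean_ratio for _ in range(n_checks)]


def gaps(rewards: list[bool]) -> list[int]:
    """Checks needed to reach each reward (counting the rewarded check itself)."""
    out, count = [], 0
    for rewarded in rewards:
        count += 1
        if rewarded:
            out.append(count)
            count = 0
    return out  # checks after the last reward are dropped


print(set(gaps(fixed_ratio(1000, 5))))   # {5}
vr_gaps = gaps(variable_ratio(1000, 5))
print(min(vr_gaps), max(vr_gaps))         # a wide, unpredictable spread
```

The fixed schedule yields identical gaps, so the brain learns when to stop checking. The variable schedule mixes very short and very long gaps, so the next check always might be the one that pays off.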

How to Grieve the Phantom

You cannot “just get over it.” You have to process the grief.

1. Validate the Pain

The first step is to stop calling yourself pathetic. Your nervous system is grieving a loss of connection. That pain is real. Accountably’s AI Coach is trained to validate this.

- AI: “You aren’t crazy for missing her. You bonded. Now we have to help your brain un-bond. It will take about 14 days for the oxytocin withdrawal to fade.”

2. The “No Contact” Rule

You cannot “stay friends” with a parasocial partner. You cannot “just lurk.” You need a hard sever.

- Block the domain.

- Unsubscribe.

- Delete the apps.

Every time you “check in” to see how they are doing, you reset the grief clock.

3. Replace the Voice

The silence will be deafening. You used their voice to fill your empty apartment. You need Audio Replacement.

- Switch to podcasts or audiobooks (content that is informative, not intimate).

- Do not swap one e-girl for another. Swap the category of noise.

FAQ: Escaping the Simulation

Q: Is it weird that I feel like I cheated on them by quitting? A: No. That is the Loyalty Contract you signed in your head. Creators use language like “We,” “Family,” and “Team” to weaponize loyalty. You feel guilty because you are breaking a tribal bond. Recognize it as a marketing tactic, not a moral failing.

Q: Can AI help me get over an AI girlfriend? A: Ironically, yes. An AI Accountability Coach (like ours) provides a non-romantic social anchor. It gives you a place to vent the grief without the sexual/romantic hook, helping you bridge the gap back to reality.

Q: How long does the withdrawal last? A: The acute sadness usually lasts 10 to 21 days. This is the time it takes for your brain to realize the “Tribe Member” is gone and to recalibrate its social baseline.

Real Pain, Fake Relationship

The pain you feel is the cost of buying an illusion. But the pain is also a signal. It is your brain screaming for real connection. Use the grief as fuel to find a real tribe, real friends, and real intimacy. The screen cannot love you back.

(Find connection that is real, free, and yours.)